|

|

|

|

|

| Preparing to shut down |

| Posted on Friday, April 29, 2022 at 05:15:29 PM | -doNka- |

|

| |

After a second life planet-rtcw is again going to shut down.

Hosting is expensive and maintenance is a bother. Old php stack and app are vulnerable to all kinds of sqli/email attacks that it's just not worth it.

Bots are rampant and hackers are plentiful. Internet is a bad place to host well, pretty much anything.

I will migrate a few features like client stats and server-on-demand to stats.rtcwpro.com, but for now I'm just putting this baby to sleep once again. |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| Closing 2 years of client stats! |

| Posted on Wednesday, January 19, 2022 at 07:15:30 AM | -doNka- |

|

| |

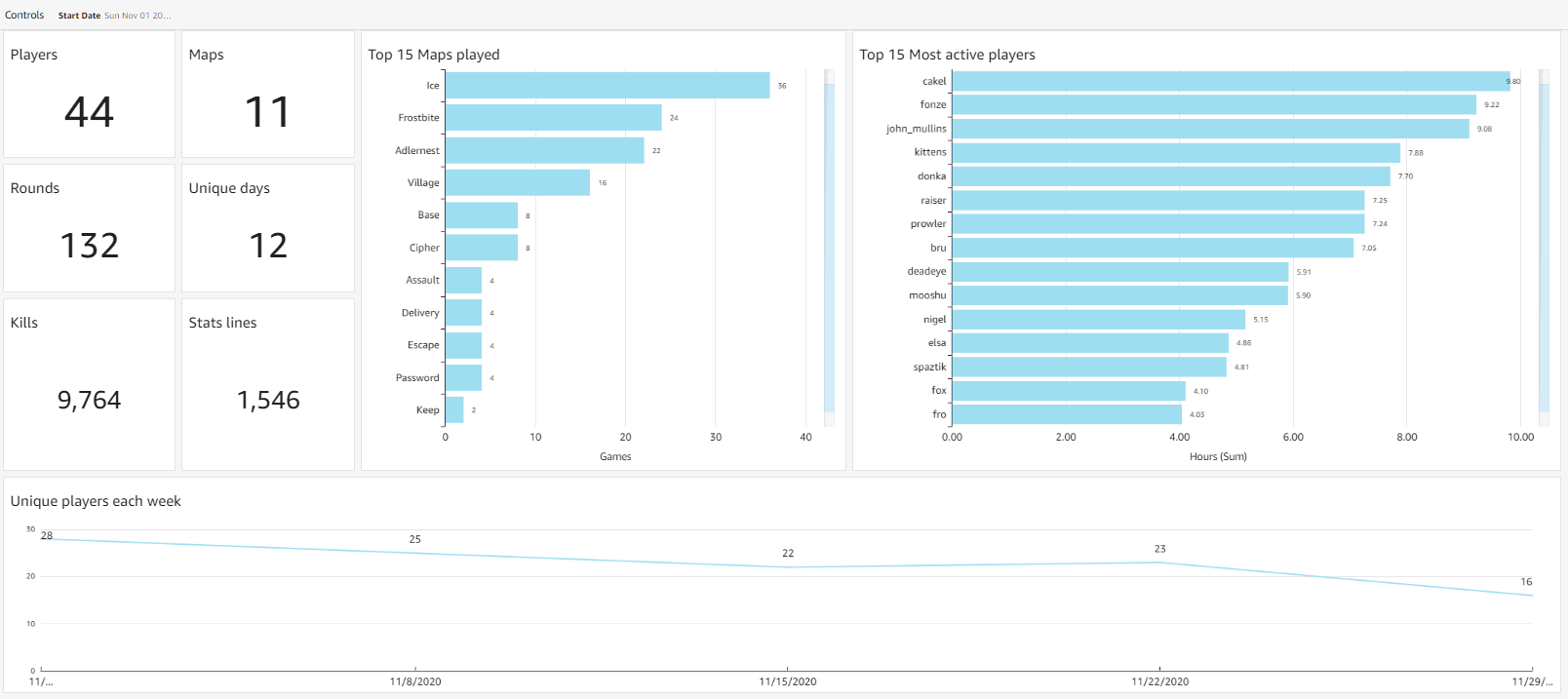

A pet project for learning python and pandas while processing RTCW stats started in 2018. Lots of doubt went into who and why and what value it will provide to a community of 17 people that have already stats processors, but there was a vision. It was modern data science techniques applied to RTCW with more detailed, rich, more insightful data extraction and analysis. The next level was taking two games together and aggregating the results.

5000 executions later, one can say the adoption went well. 1.3G client stats submitted, 24 seasons aggregated in NA, ELOs, fun stats of every kind.

For the record, here are December 2021 stats:

https://stats.donkanator.com/endseason/stats-2021Dec.html

Now onto the exciting part - summary for the last 2! years! I have aggregated every single recorded game and made the final dataset of 105,000 matches public, forever.

Here are some insights I have put together:

https://stats.donkanator.com/endseason/RTCW+Yearly+Analysis.html

And if you are into that kind of thing - datasets, analytics, etc, you can run the provided Jupiter notebook here

https://stats.donkanator.com/endseason/RTCW+Yearly+Analysis.ipynb

The next frontier is https://stats.rtcwpro.com/ where stats are automatically submitted by all participating RTCWPro servers to the central repository. The automation makes this whole process a lot more fun, robust(after all bugs are out) and open to new possibilities. |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (1)

.:Back to Top:. |

|

|

|

| Catching up on 2021 stats |

| Posted on Saturday, December 11, 2021 at 09:40:49 PM | -doNka- |

|

| |

Most of my time is going to RTCW Pro stats - https://stats.rtcwpro.com/leaders

It's an exciting data flow where all RTCWPro servers around the world submit stats automatically to a central processing location. Match records are stored, processed, and made available within seconds. This is the next frontier of RTCW stats and it needs more priority/time than older client files.

To keep the promise, here are the stats for 2021 August-November. I will promptly close December when the month is over and will probably not have any more client-based seasons. There are no plans to take down (maybe refactor to a cheaper solution) old stats upload functionality at this time.

Enjoy:

August 2021

September 2021

October 2021

November 2021 |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| Community project for RTCWPro awards |

| Posted on Tuesday, November 16, 2021 at 09:58:39 PM | -doNka- |

|

| |

World RTCW players, I'd like to invite you to contribute to the current RTCWPro stats project https://stats.rtcwpro.com/

I need help right now with your ingenuity and coding to process game events and produce award insights.

Task: provided a python array of RTCWPro events (kills, etc) you need to produce a match award like "Top Killer" or "Backstabber"

How to get started?

1. Copy everything in this folder to your PC and run just to test.

-- https://github.com/donkz/rtcwprostats/tree/master/test/gamelog_process

2. Dig into the code and comments to see what is the test setup and actual code

3. Write your own class and plug it in where comments are!

-- Be nice and do some good tests and encapsulate your code safely into try/catch clauses.

4. Pass the code back to me and i will work to bring it into common repository.

Comments are welcome!

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| RTCW Servers OnDemand |

| Posted on Sunday, July 18, 2021 at 07:31:57 AM | -doNka- |

|

| |

I'm rolling out a new RTCW server hosting solution!

Anyone can request an RTCW Pro server on demand by a click of a button. All you have to do is to head over to Server OnDemand link and request one in a region next to you.

It takes about 2 minutes for the server to provision and depending on a region, the address always will be:

na.donkanator.com (Virginia)

sa.donkanator.com (Sao Paolo)

eu.donkanator.com (London)

The template for the server is a container maintained by msh100 - https://github.com/msh100/rtcw

BTW , this guy deserves major recognition for the amount of work he put in. His RTCWPro container provides a amazingly flexible option for RTCW server hosting. |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

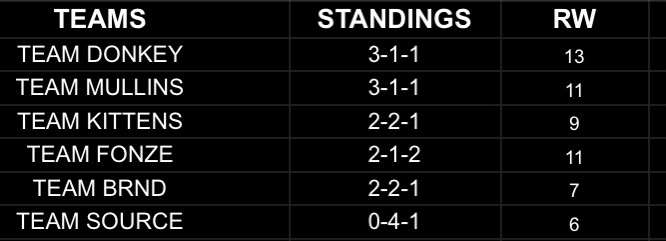

| RTCW 2021 Spring Fling Tournament - 6v6 - Completed. |

| Posted on Thursday, May 20, 2021 at 07:38:47 PM | -doNka- |

|

| |

Post by Raiser

Thanks to the cup admins Virus, Cypher, Gut, Eternal, Source, Spaztik, Cak and Raiser for planning and hosting an unbelievable exciting two day tournament. Thanks to all the cup participants ended up being 6 teams of 6; around 42 starters. Shoutout to the casters MakL, Ryan and Fonze for streaming as well as following the games; Well done. We look forward to seeing everyone again for the next cup; 2021 RTCW Pro Spring Fling is officially over.

Edit: Special thanks to RTCWPRO contributor team https://github.com/rtcwmp-com/rtcwPro/graphs/contributors

FINAL STANDINGS:

1st Place - Team Donkey - sDk - Still Don’t Know

2nd Place - Team Mullins - Redue - Redue Draft

3rd Place - Team Fonze/Team Kittens - ehhhh/8===D

5th Place - Team Brandon - T$T - The Money Team

6th Place - Team Source - [z] - Sleep

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| 2021 RTCWPro Spring Fling servers |

| Posted on Saturday, April 24, 2021 at 02:54:07 AM | -doNka- |

|

| |

These are the servers for the tournament, please first check with admins if there's any doubt.

t1.donkanator.com resolves to 54.173.77.70

t2.donkanator.com resolves to 34.201.58.197

/connect t1.donkanator.com;password cup2021

/connect t2.donkanator.com;password cup2021

Both servers have RTCW on default port 27960 and additional 27962

For those far far away from US/Virginia region - Europe, SA, Australia, try the following addresses to the same servers. They have enhanced networking enabled and could have lower ping and less jitter

eu.t1.donkanator.com

eu.t2.donkanator.com

https://www.youtube.com/watch?v=GAxrPQ3ycsQ&t=1s

For the good citizens that help retain stats

PLEASE SUBMIT STATS WHILE SELECTING REGION: NA and MATCH TYPE: Event/Tournament

?page=upload_stats

Reminder: to collect stats you need to set logfile 1, I have a windows shortcut like this for example:

"D:GamesReturn to Castle WolfensteinWolfMP.exe" +set fs_game rtcwpro +connect rtcwpro.donkanator.com +exec myfavoriteconfig.cfg +logfile 1

Additionally , you can use these URLs as server browsers

http://t1.donkanator.com/

http://t1.donkanator.com/27962/

http://t2.donkanator.com/

http://t2.donkanator.com/27962/ |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| November Season Closing |

| Posted on Monday, November 30, 2020 at 10:23:30 PM | -doNka- |

|

| |

Another season came to an end and although this may look a little slimmer - much more exciting things are happening.

RTCW is getting a new mod - RTCWPro in place of OSP that served well for 18 years, but lost support almost as long ago. RTCWPro is still in very active development, but the NA scene is putting it to a good test. The stats processor was out of tune with the new client and vice versa, so quite a few stats simply went unrecorded or unreported. There's still plenty of activity to go around.

Here are the November 2020 stats. Congrats to people picking up some medals!

November results

November ELO

Health check!

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| August season closing |

| Posted on Wednesday, September 2, 2020 at 10:01:09 PM | -doNka- |

|

| |

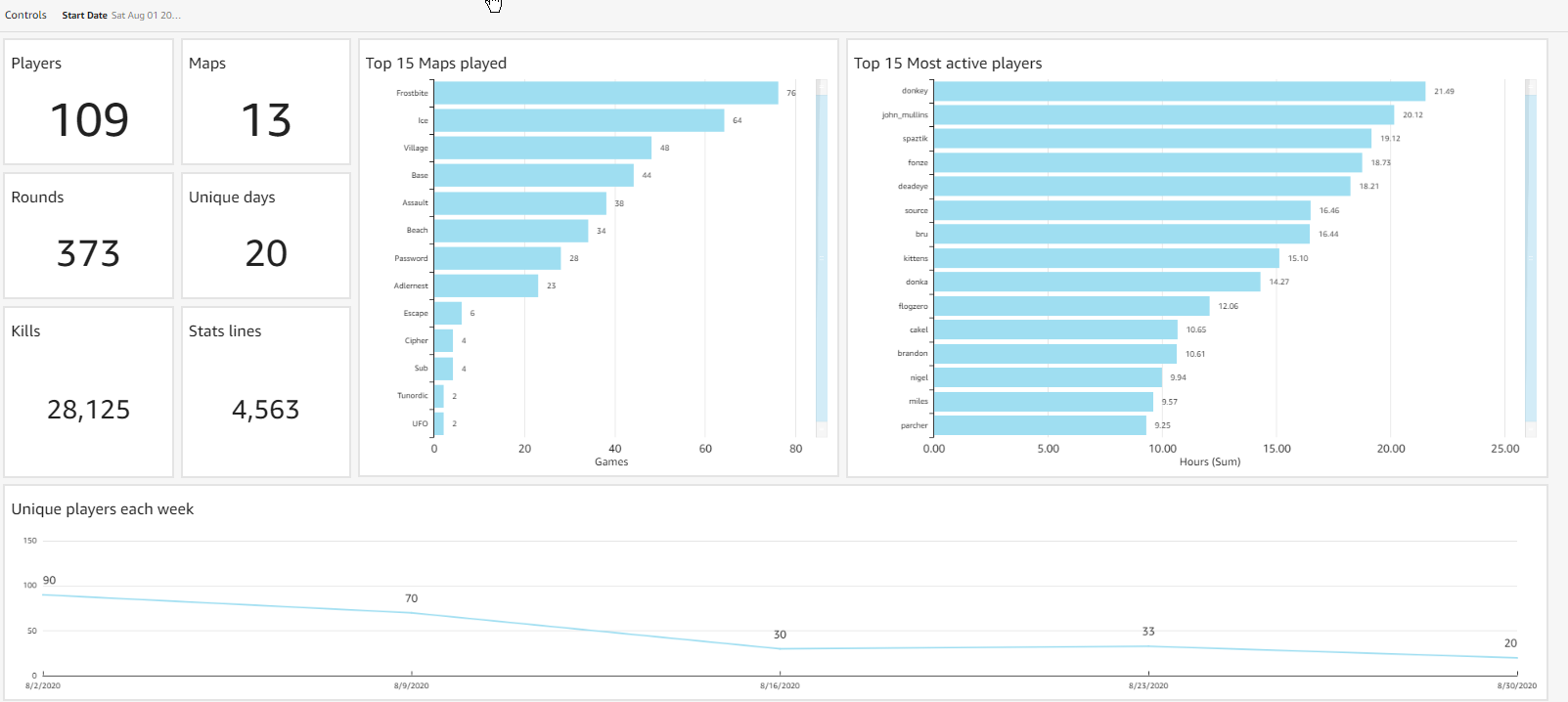

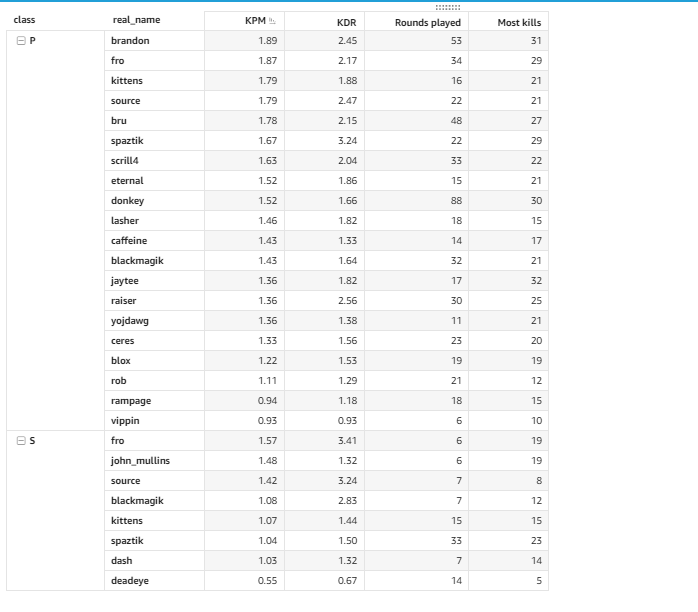

August was an interesting month - quakecon hype, most scrims since 2004, lots of PUGs and of course large numbers of new players in pubs on Thursdays/Sundays. Some players are taking time off after the QuakeCon burnout, but our numbers of regular players are stronger than ever!

These are the final stats for the season:

https://stats.donkanator.com/endseason/stats-2020Aug.html

Elo curves are coming soon!

Congratulations to the season winners!

1. Murkey

2. Source

3. Parcher

Health check - pay attention to the first week of August!

and panzer/sniper stats

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| Quakecon 2020 Final stats |

| Posted on Wednesday, August 12, 2020 at 09:59:52 PM | -doNka- |

|

| |

Congrats to all winners:

Invite + Playoffs

flagrant - (C)Warrior, Brandon,Source,Kittens,Bru,Nigel,DeadEye,Knifey

blatant - (C)Eternal,cKy,HotDamn (Elusive),Flogzero,Murkey,Candy,Donkey,Crono

3rd place decider is Thursday 9:30 EST 8/13/2020

https://s3.amazonaws.com/donkanator.com/stats/endseason/Quakecon2020-invite.html

Open winners - Get Stompled - (C)Conscious,Spaztik,Blackmagic,Dresserwood,Fonze,Pixi,Cak-el,Siluro

https://s3.amazonaws.com/donkanator.com/stats/endseason/Quakecon2020-open.html

Available casts:

https://www.youtube.com/playlist?list=PL9SMHmlI4wfCkMqaMufAoD0frk8wA8ez0 |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (1)

.:Back to Top:. |

|

|

|

| Raiser retirement #57 |

| Posted on Tuesday, July 14, 2020 at 02:20:45 PM | -doNka- |

|

| |

Raiser is close to beating Brett Favre with his retirement drama, but this one we are ready to take very seriously:

I just wanted to say guys I have enjoyed playing with everyone it was nice actually coming back and playing RTCW the past four years but after being banned from the NA discord a month ago for a ridiculous reason I thought that would be a long enough punishment in which the admins thought different deciding to double down and keep me and h2o banned. Thank you to the Euro community for welcoming and allowing me to play in the gathers while I didn’t have a job for those two months it was a very stressful time for me back then but being able get away from the real world and play with you guys so competitively it was alot of fun. It’s time for me to uninstall and walk away from this game. I don’t agree with holding these bans from both communities and I can guarantee you I am not the only one who’s thinking it. I had a couple of guys message me asking to join their team for qcon too but it looks like that’s not gonna be able to happen. Good luck to everyone I’ll miss both the NA and the Euro commmuity except for Caff, Nigel and Miles.

Shoutout to the NA original admin crew Virus, Gut, Cypher for organizing all the tournaments the last four years. Eternal - Hanging in there with everything that happened in the NA community always having my back trying to keep me in the community. I’m sorry I wasn’t able to control how I acted in these discord channels it’s a lot harder for me than you think.

Last but not least the Euro admins for everything they did to try and organize with the NA admins to make this qcon event happen it breaks my heart I will not be able to participate and this is why I need to move on.

Take care everyone.

Fr4gster, Sneaky, Raiser 2002-2006 - Raiser 2016-2020

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| QuakeCon 2020 at Home! |

| Posted on Friday, July 10, 2020 at 06:33:56 PM | -doNka- |

|

| |

AUGUST 7-9, 2020

THIS YEAR QUAKECON WILL TAKE PLACE IN THE CONVENIENCE OF YOUR OWN HOME.

Virus047: Yup yup!

[9:44 AM] Virus047: Qcon approached myself and gut about two months ago with discussions to begin planning this.

[9:44 AM] Virus047: Launched the news yesterday! :slight_smile:

[9:46 AM] Virus047: This definitely is legit and 100 percent supported by Quakecon and Bethesda.

https://www.rtcw.live/home |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| Updated versions of Cipher and Nordic are now available! |

| Posted on Thursday, June 25, 2020 at 04:01:02 PM | -doNka- |

|

| |

(from source)

Here are some of the major changes:

Cipher (B2)

- added ladder clip to the sides of the river (and raised the water level) so people can get out easier

- resized and restructured the lower complex to make it more straight forward and scalable for 3v3

- removed explodable barrels for stability

- various textural and staging upgrades to improve visual clarity

https://s3.amazonaws.com/donkanator.com/rtcw_maps/te_cipher_b2.pk3

Nordic (B2)

- fixed bug that made it possible to hear sounds from distant areas

- fixed bug where destroyable grates remained after being broken with non-explosive weapons

- fixed bug that made fences solid/bulletproof

- added drop-down from lower axis spawn to emergency access tunnel / planning room

- added more initial spawn points at the north turret

- added outside entrance to office balcony ladder

- removed explodable barrels for stability

- restructured the office/warehouse area to make it more straight-forward

https://s3.amazonaws.com/donkanator.com/rtcw_maps/te_nordic_b2.pk3

For both maps, I also standardized the objective flags so that the primary objective (documents) is the at the top, and the forward spawn flag is the bottom. Sometimes the announcement that the objective taken doesn't get heard, and in that case this should make it easier for people to determine if the primary objective is in play.

Thanks to all those who provided useful feedback. Please download these maps before you join the pug as we will be playing them starting 6/25/2020 |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| Corona Season 3 Results!! |

| Posted on Monday, June 1, 2020 at 03:51:13 AM | -doNka- |

|

| |

Corona season 3 is over!

We had another great month with 53 unique players and 30K pug kills. This month had seen increasing amount of smaller, more constant pickup games 3v3, 4v4 mid-week games that we agreed not to include in general PUG season.

Some improvements to stats:

-Best friends stats - see pairs of players that win together the most

-Weapon signatures - changed weapon kills to weapon percentages. Kills are pretty meaningless with people playing various amounts of time. Percentages, on the other side, can help you benchmark player styles against each other

-Other percentage base fields have better sorting.

Move onto the results:

https://s3.amazonaws.com/donkanator.com/stats/2020-05-31-May-Corona3.html

Thanks for all participants, active and casual, and congratulations to the winners!!! |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| Decka Remorial |

| Posted on Thursday, May 14, 2020 at 03:32:47 AM | -doNka- |

|

| |

eternal 05/11/2020

@everyone Decka passed away on Saturday evening. All the details are not yet known, but he was recently released from the hospital following surgery. While he hadn't participated in this community recently, Decka has been a regular character in these parts for the past two decades. He was a champion, and a great person behind his sometimes prickly exterior. I am at a loss for what to say, but he will be missed.

Our next pug night is Thursday 5/14/2020. Let's get some games going to remember a good player and a teammate.

Get on discord https://discord.gg/wJqBDsT

Get on RTCW/OSP: decka.donkanator.com

If you need to install RTCW, look for fully packed installation in the #reinstallation-and-files discord channel https://discord.com/channels/272106365307060225/419127457497612298

Run wolf as admin, generate and change new CD key (otherwise you will collide with others)

Join the server for a few minutes ahead of time to make sure your config and punkbuster work.

..we start around 9PM EST |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| Corona Season 2 Results!! |

| Posted on Friday, May 1, 2020 at 05:45:00 AM | -doNka- |

|

| |

April brought even bigger numbers, more games, and new names than the previous record reason. We are dealing with splitting teams into 4 teams into different servers!

This month I enabled web upload for rtcwconsole.log files straight from the users and it’s been getting a lot of traction in the NA community as well as the EU. Please check out the “upload stats” link on the left navigation bar.

I have also coded a major overhaul to some metrics:

-New Tapout penalty for suiciding too much. It offsets high KDR for the panzers that vape a lot and /kill to boost KDR. It’s far from all malicious, but it’s a justified penalty to offset KDR.

-Megakills, Killstreaks, and Win% are awarded in ranges, instead of rankings. For example, anyone over 20 Killstreak gets the best rank, over 15 - second, etc.

-Panz penalty was reduced to 3 because Tapout is doing a good job to penalize all the vapes.

-Smoke kills penalty is 0-2 (one less).

-New ELO calculation that takes into account all players' games since January 1. It ranks by Kill performance less/more points for wins. Special weapons kills worth 50%.

-Add many countless bugs, styles, and time improvements!

Congratulations to season winners!!!

Anyone who plays on open nights and plays over 40 games is ranked.

Now head to the stats to see FINAL RESULTS

https://s3.amazonaws.com/donkanator.com/stats/stats-2020April.html

Individual night stats for the month:

(coming up)

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| Follow first 2020 cup! |

| Posted on Monday, February 3, 2020 at 09:02:52 PM | -doNka- |

|

| |

Cliffdark organized an European weekly tournament that has a few North American Teams to follow!

https://www.crossfire.nu/news/9137/rtcw-6v6-2020-signups-closed

Deadeyes Huckleberry Service DHS:

Virus047 (c), Reker, Nigel, Brujah, Kittens, Tragic, Playa, Cypher, DeadEye, Fox, Jaytee

Trinity:

spaztik (c), festus, nihilist, wangofpain, lath, vodka, c@k-el, flogzero, anom, prowler

N/A

Eternal (c), cKy, End, Raiser, brandon, Sem, Donka, Machine, Booty, Donkey

Also parcher and murkey are on ROZ:

h2o (c), mini, raw, murkey, kylie, vis, parcher |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (0)

.:Back to Top:. |

|

|

|

| Update: RTCW2 and RTCW: The Movie |

| Posted on Thursday, December 13, 2007 at 07:18:51 PM | gisele |

|

| |

Just in case anyone missed the Quakecon 2007 update, I figured I'd relay what I've learned to everyone here!

New Wolfenstein Just Announced!

id Software�s CEO Todd Hollenshead announced on Friday, August 3rd, 2007 at QuakeCon that Threewave Software is working on the new Wolfenstein game for the following platforms: PS3, Xbox 360 and PC. Threewave Software is working in conjunction with Raven Software on the title. Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (37)

.:Back to Top:. |

|

|

|

| Weekly OSP / TF2 Team Assembly |

| Posted on Monday, October 15, 2007 at 06:50:20 PM | BYE|ralphtehnader |

|

| |

Hope to see you all again on Wednesday around 8pm for some OSP gaming. The vent info is still the same.

After playing OSP for about an hour or so, let's play some TF2 for the remainder of the evening. I concur with the TF2 players on here that we should put together a team. I would be willing to host a server if there's enough interest to develop strats and pub on home turf on a 18 player server or so.

So far we have:

-Ralph

-Donka

-Juventus

-Ding

-w0rd (Papi Chulo)

-Bullz

-Paco's Gun

-BigshoT

-Snappas

-playa

-CaseyJones

-Spiza

-Tyson

-be4st

Anyone else interested?

If so:

http://www.totalgamingleague.com/team/Triad

join pw: vodka

Edit: As for the team name, some have suggested Team RtCW, which is cool. I was thinking of Team Wolfplayer or sticking with the classic BYE| = Bomb Your Enemies to go with the spam :D |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (84)

.:Back to Top:. |

|

|

|

| TF2 4 Newbs Tonight? |

| Posted on Tuesday, October 9, 2007 at 03:42:51 PM | BYE|ralphtehnader |

|

| |

Hey Folks,

It's been a while since I've been excited about a new game, and with Donka talking up TF2 and Orange Box arriving in the mail, I'd like to get some peeps on vent tonight to go over the fundamentals, and give it the old college try.

And if you don't have a copy of TF2 yet -- it's 5-games in one Box, for $45 bucks. Awesome deal.

http://www.ebgames.com/product.asp?product_id=646931

You can also order via Steam I'm told.

If you'd like, stop by at 8pm EST:

Vent Server: ventrilo4.va.powervs.com:5066

Password: bcdcrules

I'll make a TF2 channel, and maybe we can hop on ECGN afterwards for some comparing/contrasting.

Rock on,

Bill |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (51)

.:Back to Top:. |

|

|

|

| eXtinction 3v3 Tournament |

| Posted on Monday, October 1, 2007 at 04:53:04 AM | eX||hosey |

|

| |

eXtinction 3v3 Tournament!

This 3v3 double elimination RtCW 1.41 OSP tournament has been put together by a group of players that would like to see the OSP community get one last competitive run in this great game! It has been in the making for about one month now we thought it is about time we announce it to the public.

This tournament has been fully thought out and has everything almost ready for sign ups to be up.

Prizes

______

1st Place: Black Icemat Mousepad, Logitech G7 Laser Gaming Mouse, Logitech G15 Gaming Keyboard

2nd Place: Black Icemat Mousepad, Logitech Precision PC Gaming Headset

Sign ups and website containing Rules and everything you need to know will be posted shortly.

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (53)

.:Back to Top:. |

|

|

|

| What's your dm_base of the future? |

| Posted on Sunday, September 9, 2007 at 08:11:23 PM | MUST B H4X |

|

| |

So... RTCW is bleeding out dead and there are some interesting games comming out rather soon (which means long before RTCW2 will ever come out). I would like to know what games the RTCW community (of the past and present) is planning to move on to or at the very least is looking forward to. Are there any former RTCW teams that are planning to head to another game and stay as a team? What are you guys looking forward to and why?

I know that personally I'm looking forward to Crysis because of the insane engine and the way that you customize the style of play. I'm also looking forward to Call of Duty 4 as far as competition goes, because it looks like (for the most part) it would be a good competition game. Haze is a game that looks like it may have a good single player. The game Severity that is being developed for the CPL for obvious competition reasons... as well as StarCraft II because of the replayability of a good RTS.

No need to say RTCW2.

(p.s. anyone know where invisi has gone? :p) |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (99)

.:Back to Top:. |

|

|

|

| RTCW OSP 3v3 Tournament |

| Posted on Thursday, August 23, 2007 at 02:23:51 AM | cr//st33la |

|

| |

Over the past few weeks myself and a few others have been working on throwing a RTCW OSP 3v3 Tournament. Before ordering servers, and posting the sign up page, we would like some feedback on the idea from the OSP community. So we know if it was a waste of time or if teams will sign up and participate. There is still a bit of work that needs to be done but if we get the feedback we hope we do sign ups will be available shortly.

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (73)

.:Back to Top:. |

|

|

|

| Gang Kidnaps Top Gamer to Get His Password |

| Posted on Saturday, July 21, 2007 at 08:57:21 PM | -doNka- |

|

| |

An armed gang of four kidnapped one of the world's top RPG gamers after one criminal's girlfriend lured him into a fake date using Orkut, Google's social network. After sequestering him in Sao Paulo, they held a gun against the victim's head for five hours to get his password, which they wanted to sell for $8,000. And yes, the story gets even better.

Link to article |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (46)

.:Back to Top:. |

|

|

|

| RTCW2 exists? |

| Posted on Friday, July 20, 2007 at 08:16:59 PM | FuZioN)nG( |

|

| |

Small mentions of RTCW 2 in an article over @ IGN

"IGN: So what's going on with id right now?

Tim Willits: We have a lot of stuff going on right now. You're here to see Quake Wars [check out that preview here] so I won't go into that right now. We're working with Raven on Wolfenstein right now and we'll have a little more to talk about with that at QuakeCon. We're here talking to potential licensees about id Tech 5, which is the structure of our new title being developed internally."

Link: http://pc.ign.com/articles/804/804112p1.html

Someone going to Quakecon, please fill us in. And pictures too if we're that lucky ;)

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (29)

.:Back to Top:. |

|

|

|

| QuakeCon Registration |

| Posted on Tuesday, July 17, 2007 at 06:15:15 PM | -doNka- |

|

| |

To whom it may concern:

TOURNAMENT REGISTRATION WILL BEGIN AT QUAKECON.ORG ON

Wednesday July 18th @ 6:00 PM CDT

Games/Prizes:

Prize money for ET:QW 6v6:

1st: $22,000

2nd: $16,000

3rd: $8,000

4th: $4,000

Prize money for Quad-Damage(all versions of quake) 1v1:

1st: $20,000

2nd: $12,500

3rd: $7,500

4th: $5,000

5th/8th: $1,250 |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (23)

.:Back to Top:. |

|

|

|

| WTV Sunday 7/15/07! |

| Posted on Saturday, July 14, 2007 at 07:18:03 PM | FuZioN)nG( |

|

| |

Get your first peek at Team America!

Sunday we take on  #not.rtcw -- with recognizable former euro stars such as Creamy, Ogdoad, and Yilider from 4kings, Ramzi from GMPO, and Artan from ECGN 24/7 Beach ;p #not.rtcw -- with recognizable former euro stars such as Creamy, Ogdoad, and Yilider from 4kings, Ramzi from GMPO, and Artan from ECGN 24/7 Beach ;p

MAPS: TE_ESCAPE2; MP_VILLAGE (both maps abba on each)

WTV INFO: /connect 62.75.221.160:27990

TIME: 3eastern/2central/21:00euro

Expected  TEAM AMERICA Lineup: TEAM AMERICA Lineup:

elusive

FuZioN

Juventus

mutha``

newt``

snappas

Each map will be played on a seperate server. One map european and one map american. Come support us :)

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (33)

.:Back to Top:. |

|

|

|

| OSP Pub Night This Thursday. |

| Posted on Monday, July 2, 2007 at 03:32:11 PM | -doNka- |

|

| |

It's time to stop beating around the bush and put your mouth where the balls are(Dodgeball). The ideas and requests are flying around to get the OSP night going on. We've seen a few suggestions for both, a pug or a pub night. Let us start with a pub night to see how many people we can gather on a given night. I'm sure with what we have right now we can easily fill up a 16-20 slots server and get some good old wolf OSP action going like the old times. It's time to stop beating around the bush and put your mouth where the balls are(Dodgeball). The ideas and requests are flying around to get the OSP night going on. We've seen a few suggestions for both, a pug or a pub night. Let us start with a pub night to see how many people we can gather on a given night. I'm sure with what we have right now we can easily fill up a 16-20 slots server and get some good old wolf OSP action going like the old times.

OSP Server IP: 66.254.117.183:27960 }-{eaven's Gate by The BirdZ 333 FPS!!!!! (CHI, needs rotation)

I'm sure most of the people would like to join some vent to chat, so we need a Vent info as well.

Vent Server IP: TBA

OSP pub night Thursday July 5th 9EST

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (109)

.:Back to Top:. |

|

|

|

| Article: RTCW Competition History |

| Posted on Friday, June 22, 2007 at 07:44:27 PM | -doNka- |

|

| |

Believe it or not, some people don't even know that article column exists, so there's a need for this post.

Karpov & Co spent quite a bit of time lining up the most detailed history record of RTCW competitions and major dramatic events that followed. Besides actual events you will find some scores, rosters, champs, and comentaries by those who could remember their lives behind the monitor 5+ years back. The whole thing came out to be pretty lenghty, but read it anyway, you will be entertained.

Artice: RTCW Competition History

Link with colors: DOWNLOAD NOW!

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (73)

.:Back to Top:. |

|

|

|

| So far has ET:QW met up to your expectations? |

| Posted on Thursday, June 21, 2007 at 05:31:11 PM | hollywood |

|

| |

Splash Damage opened up the Enemy Territory Quake Wars beta to the public yesterday. Currently only fileplanet subscribers (have to pay $) can only test out the beta. In the next couple of days it should be open up to non file planet subscribers (free) but you will not be guaranteed an etqw beta key. There will only be 60,000 slots. If you are not in the beta already, there is close to 1,000 people playing online on about 140 servers across the US, Australia and Europe.

Splash Damage had made it clear that this is a beta version and not a demo. Many people on various forums that have played many previous team based games are already calling it a failure.

http://www.hardforum.com/showthread.php?p=1031191446&posted=1#post1031191446

If you are in the ET:QW beta please post your feedback.

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (60)

.:Back to Top:. |

|

|

|

| id Software |

| Posted on Wednesday, June 13, 2007 at 09:42:12 PM | [+ oo] rampage |

|

| |

Just a follow up from the already well known "secret" engine that id has been working on. It has finally been put to video and shown for the first time to the public. I couldn't get a better link other than a download link so if anyone can find one please post it.

http://www.gametrailers.com/downloadnew.php?id=20463&type=wmv

You could also go to www.gametrailers.com and view it there.

10:14AM - "So the last couple of years at iD we've been working in secrecy on next-gen tech and a game for it... this is the first time we're showing anything we've done on it publicly." iD Tech 5... "What we've got here is the entire world with unique textures, 20GB of textures covering this track. They can go in and look at the world and, say, change the color of the mountaintop, or carve their name into the rock. They can change as much as they want on surfaces with no impact on the game."

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (16)

.:Back to Top:. |

|

|

|

| RTCW Cup announced! |

| Posted on Sunday, June 3, 2007 at 06:53:10 PM | X-tra |

|

| |

Im proud to present You the Burner RTCW Cup powered by PowerMauerClanGermany.

Do you also wallow in old memories?

Showtime from PMCG got a great idea to bring back these old memories!

Let�s start a 6on6 RTCW Cup in hopes to see some nice matches in WTV and have some fun.

You can signup your team till 17.06.07 here: http://www.pmcgclan.de/rtcwcup/

I hope we'll receive a lot of sign-ups and it will be a great event!

This cup is covered by WTV and Shoutcast.

Cup Informations:

� Mode: 6on6

� RTCW Version : 1.41b

� Rtcw OSP Version: 0.9

Mappool:

mp_beach

mp_base

mp_ice

mp_village

mp_aussault

te_frostibte

Additionally the Community can vote for 2 more Maps - check the Cupsite.

I hope we will have another enjoyable event, the signups have been openend, good luck & have fun to everyone.

#burner-cup.rtcw @ Quakenet

RTCW Cup |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (79)

.:Back to Top:. |

|

|

|

| Ralph Would Love Your Help... |

| Posted on Friday, June 1, 2007 at 07:49:49 AM | BYE|ralphtehnader |

|

| |

It's been a while since The Hammer interviewed me on P-RtCW, but I wanted to give you all the heads up that I'm still making music with my band, and we recently were nominated for 7 awards in the Hartford Advocate Bandslam 2007! It would be very sexy (and cool) to have the support of the RtCW community to help bring home some wins this year! As a gift for your efforts, I've included a link to download our most recent album, Diving Dreamers entirely for free. It's available on iTunes, CDBaby and Awarestore, but for your support, we're happy to provide you it for free.

Please show your support for my guitar hax by placing your ballot vote for Bill Carleton Band in the following categories:

-Best Original Rock

-Best Pop Rock

-Bill and Lee - Happy Hour Duo

-Dan Prindle - Best Bassist

-Bryan Kelly - Best Drummer/Percussionist

-Lee Sylvestre - Best Guitarist

-Tony Parlapiano - Best Keyboardist

Thanks so much! And if anything, let me know what you think of the album (good or bad feedback)!

You can also check out our music on MySpace and at www.billcarleton.com

-Ralph

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (37)

.:Back to Top:. |

|

|

|

| id Software announces new game engine |

| Posted on Friday, June 1, 2007 at 07:49:30 AM | [+ oo] rampage |

|

| |

http://www.gametrailers.com/viewnews.php?id=4696

By Eugene Huang

With releases for PC, PS3, and Xbox 360 scheduled for the end of this calendar year, Enemy Territory: Quake Wars now seems to be comfortably on autopilot. With this joint project between id Software and Splash Damage soon to be complete, it now seems as if id has been freed to work on yet another engine to be used with another brand new franchise.

At a London event earlier this week, id CEO Todd Hollenshead was the first to reveal the news to GamesIndustry.biz.

"We are working on an all-new franchise," he stated. "It's not Doom, it's not Quake, it's not Wolfenstein, it's not Enemy Territory, it's not even Commander Keen! It is a new id brand with an all-new John Carmack engine, and I think that when we show it to people, once again they'll see, just like they saw when we first showed Doom 3, that John Carmack still has a lot of magic left."

Since creating Doom 3, Carmack has had his hands full porting games such as Doom RPG and Orcs & Elves to mobile platforms. But in comparison to those relatively minor projects, this newly announced game would be their first major independently-developed title in nearly three years.

Hollenshead also states, however, that Carmack is still feverishly working on the engine, which he hopes will be used "across a wide range of applications and different games within [id's] suite of franchises". As it is still in its early development stages, Hollenshead was not at liberty to discuss details, but promises to make a formal announcement when the time is right.

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (17)

.:Back to Top:. |

|

|

|

| -[x]- End server party!!! |

| Posted on Tuesday, May 29, 2007 at 06:05:58 PM | -Tr0n- |

|

| |

Shrubapalooza (the last bastion from hackers and douche admins) Is going to be put to sleep in the morning of May, 30th, 2007.

This is an invite... from -[x]- to all of the people we've been playing with thru the years and new faces to come out and frag it up with us one last time.

Info

Time/Date : 8pm est/May 29, 2007

Place : Shrubapalooza (208.200.4.3:27960)

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (8)

.:Back to Top:. |

|

|

|

| Details On New Wolfenstein Development. |

| Posted on Saturday, May 12, 2007 at 10:15:08 PM | -doNka- |

|

| |

From Yahoo News:

The company is currently working on a new game based on the "Wolfenstein" series, and with newer motion-capture technology, it is working with a local actress to be the character in its movie.

...

The game won't come out until sometime in 2008, but the name and many other details about it are being kept secret, which is just one clue at how competitive this industry has become.

Full article

More about technology and C. Coon here

Thanks to Tosspot at ESReality for finding this. |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (3)

.:Back to Top:. |

|

|

|

| Some Crazy Internets Sh*t about Planet-RTCW and Wippuh.... |

| Posted on Thursday, May 3, 2007 at 12:38:23 AM | -doNka- |

|

| |

I've been trying to follow some leads from Planet-RTCW logs and something totally ridiculous caught my attention. Some half-dead site is bashing Wippuh for I quote, "attempting to fraudulently obtain money from the Wolfenstein community".

To sum up the content:

The author talks about a news item posted by Wippuh a while ago in which he asks for charity donations. Then author claims that Wippuh is a con man and explains why he thinks so.

Read full thing here: http://www.dhutchison.co.uk/wippuh.htm

The rest of the site is pretty entertaining too. |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (47)

.:Back to Top:. |

|

|

|

| BanksCon2007 |

| Posted on Friday, March 30, 2007 at 07:15:08 PM | .Banks |

|

| |

As alot of you know, last year, during BOB3, I had a lan/get together at my house. Several great friends and players showed up. Militant, Sage, Odin from arise, knight and I drank, and grilled, and lanned for two days straight. This year, during July, I wanna do the same thing again. Probably a long weekend type thing. For those of you that are interested, I live just north of Baltimore in Bel air(go to #banksbuddies on irc and pm me for more info). You can either p2p me, or call me on my cell anytime 443 910 0877. Name is Jason. Althought, most of you still call me Banks when you call me for some odd reason lol. This is nothing more, than just getting together, chillin, gettin a weeeeeee bit toasted, and talking bout things we all love. RTCW!!! |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (72)

.:Back to Top:. |

|

|

|

| Searching For Site Content. |

| Posted on Thursday, March 8, 2007 at 07:08:45 PM | -doNka- |

|

| |

NA.RTCW is having that time of the year when the activity is not as great due to filler time between competition seasons. There's simply not much to write in the times like these, so I'd like to turn to our small, but strong community for support. This is a good time to step out of the generally inactive crowd with some creativity and enthusiasm. Is there an article you always wanted to write to bring up a discussion? Some old screenshots saved on your hard drive? Some other unthinkable project ideas that have been eating you forever? It's a good time to submit them now. Just use your judgement and make sure that your news/article/POW is somewhat Wolfenstein related.(Please don't try to sell laptops here like spiza).

Banks have submitted an article, that I hope will not go unnoticed:

http://planet-rtcw.com/?page=articles&id=264

If you have some project in mind, but you're not sure if your effort will not duplicate someone elses existing/upcoming work you can talk to me or make a comment about it in most discussed news item(not sure who uses forums around here).

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (35)

.:Back to Top:. |

|

|

|

| Team HighBot would like their Battle for Berlin prizes |

| Posted on Wednesday, February 28, 2007 at 03:50:51 AM | DynoSauR |

|

| |

I have been in contact with a concerned humM3L of Team HighBot for more than the past two months and it's finally come to the point that he wanted me to publicly ask when they could expect to receive their hard earned prizes? I know that he has directly asked Mortal and I have as well and haven't gotten any kind of definite answer. Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (202)

.:Back to Top:. |

|

|

|

| Season Runnner Up - Warchild Under The Spotlight. |

| Posted on Saturday, February 24, 2007 at 09:32:21 PM | -doNka- |

|

| |

Looks like highlife/blur-warchild was busted with silo bot a day after the TWL OSP finals.

Here's a piece from Dyno:

I certainly didn't want to see this violation and was saddened when considering it's ramifications. I don't doubt that we will hear from warchild saying it was one of his other family members. I know we've heard this before and I would be inclined to believe warchild since his name has not been used with that GUID for a long time.

Read full post: http://www.wolfplayer.us/forums/viewtopic.php?t=269 |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (234)

.:Back to Top:. |

|

|

|

| Trinity is TWL OSP 13 Champion. |

| Posted on Thursday, February 22, 2007 at 05:56:09 AM | -doNka- |

|

| |

Trinity beat Highlife tonight in series of 4 games on Frostbite.

Round 1: Half round time set by trinity, Highlife beats it.

Round 2: Trinity holds 7:20 and caps

Round 3: Trinity Caps in 4:03, holds.

Round 4: Trinity full holds, caps.

Trinity: bigshot, cade, elusive, myth, robez, sucka

Highlife: Alien, ding, oot, ownage, silentstorm, tyson, warchild

I'd like to thank everyone who particapated in this season, especially TWL staff for keeping it together. Special mention to Gromit for quality announcements on planet-rtcw.

Stay tuned on next season announcement. I'd recommend EVERYONE who is interested to see naked wednesdays to last one more season to go and make your voice heard on TWL forums.

http://www.teamwarfare.com/forums/forumdisplay.asp?forumid=12 |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (91)

.:Back to Top:. |

|

|

|

| THURSDAY?!?!? |

| Posted on Sunday, February 11, 2007 at 08:05:58 PM | SyndicateGromit|TWL |

|

| |

I apologize for making a separate news article for this, but...

I REALLY NEED TO HEAR FROM RGR AND HL ABOUT WHETHER THEY CAN PLAY THIS THURSDAY (INSTEAD OF WEDNESDAY). I do NOT want to skip a week but I'm not going to force the teams to play on either Valentine's day (Wed) or on an alternative weekday (Thursday) without consent. As I've said in match comms and in private messages, I need to know TODAY whether RGR and HL are available (SS and TTT have said 'yes') or else I have no choice but to skip a week which sucks for everyone)

Email me at gromit@teamwarfare.com or post in match comms.

Thanks,

Gromit|TWL |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (21)

.:Back to Top:. |

|

|

|

| NiteSwine has made a statement. |

| Posted on Wednesday, February 7, 2007 at 07:53:14 PM | [a]streetz-work |

|

| |

Ive quoted this from teamwarfares forums.. NiteSwine wrote:

"I never said the door was closed on TWL sponsored leagues for RtCW. I did say we did not plan to continue, back-to-back or continuous leagues for RtCW. Options are available.

I will say I've taken some notice of various discussions and isolated activities within the community towards the development of options for continued RtCW competition. I'll admit I'm pleased by it. Maybe the real transition will be at the community level: with more activity and support emerging from the community, it may help justify future leagues and ladders hosted by TWL.

For a time, TWL will seek to encourage the community to put together options and explore new opportunities. I think this is a great opportunity for the community. If we (as a team or individuals) can help, ask us -- I'm sure you'll find us more than accomodating. I'll spend some time reviewing things as they develop, and as an admin team we'll decide when/if it's best to present another season here at TWL.

As for planet being the hub of all things RtCW, that's great. The place serves a purpose. Using it as a foundation for a venue intended for constructive discourse might be a challenge, though. Our forums are here, too. There's more expectation for contructive input here and less tolerance for ill behavior. If the community wishes to truly discuss it's options, our forums are available, too." |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (26)

.:Back to Top:. |

|

|

|

| Continuing RTCW Compeition... Continued |

| Posted on Saturday, February 3, 2007 at 05:11:45 AM | MUST B H4X |

|

| |

Well, after a few days of deliberation the old thread died quickly. I want to know if there is still enough support to continue RTCW Competition. There are currently 12 teams with a total of 152 players in the 13th season of TWL OSP (80%+ active). I also know that if this were to happen, I myself could probably get 5-10 old players to start playing again, as well as caffeine may join back up with the lack of TWL influence :D. I think if people are serious about this then we need to actually talk about it rather than sitting around thinking that it's just going to happen without any input from the community. That being said, if this were to happen, there are a few things needed to consider:

1. Admins. This is an obvious one, without someone managing the competition, there is no competition. I know that there are a lot of experienced players in this community that know the game, and people such as Mortal who set up the BFB tournament and would have a bit of knowledge in the field. As well as people such as Donka who (I assume) would be able to do a good job at maintaining a website. If you think you would make a good admin, post. Perhaps we could take a vote on all of the nominations and the top 5 would become admins? Maybe this league should have admins that are community-elected? As well, hakudushi said that he was willing to take on the challenge.

2. Infrastructure. As Donka suggested, there is the option of using Donka's Wolfenstock. Highone also suggested keeping the TWL infrastructure, however I do not think that would work out as well as hoped unless TWL had absolutely no control over it, and I don't see them agreeing to that.

3. Server configuration. I believe that at this time the current TWL OSP configuration is quite good, although if there are major concerns people can voice them here.

4. Servers. I know that Dark can supply a server (possibly two) if this league is to happen. How about you? Would many clans agree to keep paying for the servers that they have up? I don't think that the league would need to provide any servers seeing as most teams have their own.

5. And of course, money. Some people suggested that the league require a fee to play, but I do not think that it's a good idea. However, I have come up with a suggestion. Since this league would be 6v6, and it would be costly to award 6 prizes, maybe if one prize were donated it could go like this: The finals match is viewed on WTV, after the match, a poll is set up and viewers vote on who the MVP of the match was for the winning team. The MVP would then win the prize. However, there does not even have to be a prize, and I'm sure that a lot of people wouldn't find not getting a new mouse or something such a big deal.

With that being said, I think that there should also be a player cap. 12 people per team sounds sufficient, that gives room for 6 starters and 6 back-ups. The more teams the better.

Post any ideas, suggestions, comments, and support.

Oh and I couldn't think of a name for the league :O |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (235)

.:Back to Top:. |

|

|

|

| ET:QW Anytime Apr2007-Mar2008 |

| Posted on Friday, January 26, 2007 at 07:51:54 PM | -doNka- |

|

| |

From Activision press release:

"The company believes that its fourth quarter results will be significantly impacted by higher legal expenses relating primarily to its internal review of historical stock option practices, including expenses relating to the previously announced informal SEC inquiry and derivative litigation, and its decision to move the release of Enemy Territory(TM): QUAKE Wars into fiscal year 2008."

No jokez.

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (63)

.:Back to Top:. |

|

|

|

| TWL OSP the 13th: Week 8 UFO |

| Posted on Thursday, January 25, 2007 at 10:03:46 PM | SyndicateGromit|TWL |

|

| |

TeamWarfare OSP 13 League

The Playoffs start next week so let's start aiming for the head.

Week 8 will be played Jan 31:

Week 8 matchups

Syndicate.Soldiers v. Xtreme.$oldiers

TheJollyRGR [:x] v. Animate.gaming

Team_Trinity v. The Pain Train

Point.Blank v. CriticaL IMPulse-

|DaRk| v. Total Havoc

High Life v. Cross:+:Breed

Default Date and Time: Wed night, JANUARY 31, 2007 @ 10:00pm EST.

Week 6 Map: TE_UFO

Deadlines:

Teams and rosters are locked.

PLAYOFFS:

I suspect that we will go to an eight (8) team playoff this season. The main reason for this is that with only 12 teams at this point (last season we had 16), having every team make the playoffs would require giving 4 byes the first week. Rather than forcing the top 4 teams to wait 2 weeks between matches, I think the more reasonable thing is to drop the lowest 4 teams from the playoffs.

I am, however, willing to bend to the will of the league. Anyone who strongly favors a 12 team playoff, please post here or otherwise message me.

Assuming an 8 team playoff picture, this is a VERY important week as all but 3 teams have their final rankings up in the air. glhf.

TWL|Gromit |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (49)

.:Back to Top:. |

|

|

|

| Continuing RtCW Competition |

| Posted on Thursday, January 18, 2007 at 04:56:15 PM | Mauler [c0ff] |

|

| |

To those with interest:

After reading up on the TWL forums about the end of RtCW, it seems there is still quite a bit of interest. If I can get enough people interested, I'm willing to organize and host RtCW myself. I think the easiest way to determine this is through email.

Anyone who can field a team of 6+ people, please send me an email with your name and everyone who would be on your roster: rtcw@c0ff1.com

My long term goal is for this to start small and build up over time. When/if we build enough interest (or even for the first season if we have 8+ teams) I'm happy to do a pay-to-play system in order to keep people competitive. 8 teams x 6 players x $10 = $480 minimum, which comes out to $80 per player. If it grows we can talk about more options.

Depending on what I hear from people, I may organize something in the form of a website or forum in order to discuss the concept further. |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (67)

.:Back to Top:. |

|

|

|

| TWL RTCW OSP 13: Week 5 |

| Posted on Friday, January 5, 2007 at 04:08:58 AM | SyndicateGromit|TWL |

|

| |

TeamWarfare OSP 13 League is 1/2 way to the playoffs. Week 5 wil be played Jan 10 and features the following matchups:

Week 5 matchups

Syndicate.Soldiers v. |DaRk|

TheJollyRGR [:x] v. High Life

The Pain Train v. CriticaL IMPulse-

Cross:+:Breed v. Point.Blank

Team_Trinity v. Total Havoc

Animate.gaming v. No Medics Necessary

WANsanity v. Team-Lethal Injection

deathrow.rtcw v. Xtreme.$oldiers

PLEASE REMEMBER that the rosters lock on Jan. 9, 2007 at 11:59pm est.

Default Date and Time: Wednesday night, JANUARY 10, 2007 @ 10:00pm

Week 4 Map: BASE

Revised OSP League Config:

http://www.teamwarfare.com/forums/showthread.asp?forumid=12&threadid=340569

The changes are few:

OSP - Universal Config - League and Power Ladder

- Config file renamed from o_lg.cfg to o_twl.cfg

- cl_timenudge changed from in -50 0 to in -20 0

- g_log changed from o_lg.log to o_twl.log

Deadlines:

Roster Lock: Jan. 9, 2007 at 11:59pm est

Rules:

http://www.teamwarfare.com/rules.asp?set=Return+to+Castle+Wolfenstein+League

Please note one significant change to the league scoring system. This season, forfeit losses will result in -1 league point. In the past, forfeit losses were scored 0 points:

Wins - Credited 3 League Points, 1 Win, and Rounds Won/Rounds Lost

Byes - Credited 3 League Points and 1 Win

Forfeit Win - Credited 3 League Points and 1 Win

Loss - Credited 1 League Point and Rounds Won/Rounds Lost

Forfeit Loss - Debited 1 League Point

Happy New Year, everyone.

TWL|Gromit

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (39)

.:Back to Top:. |

|

|

|

| New Poll - Sensitivity. |

| Posted on Thursday, December 21, 2006 at 07:34:54 PM | -doNka- |

|

| |

This topic will not die even when everyone switches to consoles. Mouse/controller sensitivity is a very personal preference that takes sometimes days to get used to and only seconds to lose. This is one of the most addressed variables of all times on all forums in most mouse related games.

Do you know what your sensitivity is? No everybody realizes that sensitivity is not just a number that you type in your console.Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (41)

.:Back to Top:. |

|

|

|

| You Got Mice! |

| Posted on Wednesday, December 13, 2006 at 07:16:35 AM | -doNka- |

|

| |

There is one article on the internets that has been raging the ratings in the IT world for the last couple of days. Sujoy, the father of THE esports site ESReality.com, posted an amazing research on benchmarking computer mice. This is one of the most "sensitive" topics in the gaming world, so I'm sure most of you will relate to it.

Picking out the best mouse is a tricky business because we have to combine different benchmark scores. It's like judging cars where each one has a 0-60mph time, a top speed, and different suspension. A Formula 1 car might be perfect for racing at Monza, but won't do so well in a cross country rally. I've combined all of the mouse results by taking them as a factor of the top performer.

Read entire article HERE |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (63)

.:Back to Top:. |

|

|

|

| Possible Shrub Season?? |

| Posted on Friday, December 1, 2006 at 07:07:04 AM | [a]str33tz |

|

| |

Niteswine posted on TWL Forums shortly after SS won the season.

http://www.teamwarfare.com/forums/showthread.asp?forumid=52&threadid=341678

Congrats to SS on another win, and also to SeaDawg on another season. Tried some new things this last go'around, and it seemed to work out pretty well.

So, let's talk about a potential 7th league for this mod. Is there enough interest? I'm inclined to delay starting anything before the 1st, but we can determine interest-level and set up some parameters.

From the hip, I'm leaning towards a standard maps season, using the following parameters:

* No-shows will result in a -1 point, applied to their league point accumulation.

* Any team with less than 6 league points at the end of the 8 week regular season will not be advanced to the playoffs.

* There are 8 weeks in the regular season. All teams should earn at least 8 league points. A team that plays 7 matches, but no-shows on a single match will end up with only 6 league points.

* A team that no-shows more than 1 time during the regular season will be unable to participate in playoffs.

* Beginning after the second week of regular season, any team having a negative league point value throughout the remainder of the regular league will be removed from the league.

* A team joining at mid-season will need to win at least one match in order to earn 6 or more league points -- call it a risk for joining late -- so, join now! |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (21)

.:Back to Top:. |

|

|

|

| TWL RTCW OSP 13: Week 2 |

| Posted on Thursday, November 30, 2006 at 06:44:09 PM | SyndicateGromit|TWL |

|

| |

TeamWarfare OSP 13

Week 2 matchups

Animate.gaming v. Cross:+:Breed

High Life v. Syndicate.Soldiers

Team_Trinity v. WANsanity

Critical Impulse- v. The Jolly RGR

The Pain Train v. Xtreme.$oldiers

|DaRk| v. No Medics Necessary

Point.Blank v. Total Havoc

Xtreme $trike Force - XSF is the only remaining team which forfeited last night. XSF is getting a bye, but must send me a ss of at least 5 of their players on a server (with GUIDs revealed) at matchtime next Wed. If I do not receive this ss by 11:59pm next Wed, they will be kicked from the league.

Default Date and Time: Wednesday night, DECEMBER 6, 10:00pm EST.

Week 2 Map: ASSAULT

Revised OSP League Config:

http://www.teamwarfare.com/forums/showthread.asp?forumid=12&threadid=340569

The changes are few:

OSP - Universal Config - League and Power Ladder

- Config file renamed from o_lg.cfg to o_twl.cfg

- cl_timenudge changed from in -50 0 to in -20 0

- g_log changed from o_lg.log to o_twl.log

Deadlines:

League Lock: Wed., Dec. 20, 2006 at 11:59pm est

Roster Lock: Jan. 9, 2007 at 11:59pm est

Rules:

http://www.teamwarfare.com/rules.asp?set=Return+to+Castle+Wolfenstein+League

Please note one significant change to the league scoring system. This season, forfeit losses will result in -1 league point. In the past, forfeit losses were scored 0 points:

Wins - Credited 3 League Points, 1 Win, and Rounds Won/Rounds Lost

Byes - Credited 3 League Points and 1 Win

Forfeit Win - Credited 3 League Points and 1 Win

Loss - Credited 1 League Point and Rounds Won/Rounds Lost

Forfeit Loss - Debited 1 League Point

Gromit|TWL |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (102)

.:Back to Top:. |

|

|

|

| RTCW Week: OSP 13 Starts. Info and Preview. |

| Posted on Sunday, November 26, 2006 at 11:13:06 PM | -doNka- |

|

| |

This week Shrub league will pass the flag to OSP. OSP matches start Wednesday night, Nov 29th and will continue till late february. So shake off that turkey fat you've accumulated during the weekend, wash off the gravy from your chin and get ready for the new season! It's been quite a while since OSP 12 season ended, so I'm sure people are already itching for some action.

Currently Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (26)

.:Back to Top:. |

|

|

|

| Celebrating the Big 5! |

| Posted on Monday, November 20, 2006 at 07:10:51 PM | -doNka- |

|

| |

MESQUITE, Texas - Nov. 20, 2001 - Retail outlets are bracing for the blitzkrieg with the announcement that id Software's Return to Castle WolfensteinTM for the PC is now available. Activision, Inc. and id Software confirmed today that the title has been shipped to retailers and will be available on November 20 for a suggested retail price of $49.99.

Today we're celebrating 5 years since RTCW hit the shelves back in 2001. That's been exciting time for all of us and there's more to come. During that time frame people grew, finished schools, graduated from universities, got and changed jobs, married, had kids. It's a lot of time if you try to go back and remember what happened to you while you were actively involved into RTCW scene.

Let us have a 5 years anniversary celebration OSP pub tonight at Hell Gate 1 with stats and your old friends. Attach [5] at the end of your nickname. Please, let your friends know who you are by showing up with your real nickname.

Please make sure your friends know about it. Notify them via MSN, AIM, Xfire if you wish.

Tentative start at 10pm EST. |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (16)

.:Back to Top:. |

|

|

|

| Battle For Berlin - Tournament Championship Match |

| Posted on Friday, November 17, 2006 at 11:32:39 PM | Mortal :o |

|

| |

Battle For Berlin is proud to present the last match in the tournament, the tournament championship match!

.gif) Hostile Inc vs. Hostile Inc vs.  HighBot on Ice tonight! HighBot on Ice tonight!

Hostile Inc is made up of Slayaya (fX, opp), Remedy (wSw, fX), Rambo (-a-, fX) and their backup Serum (fa, -Tv-). They are a solid team, with alot of experience with each other.Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (26)

.:Back to Top:. |

|

|

|

| Battle For Berlin: Second Round |

| Posted on Monday, November 6, 2006 at 09:03:34 AM | Mortal :o |

|

| |

Battle For Berlin proudly presents the second round of tournament matches!

The third day will encompass the first 8 Winners Bracket Matches, and the forth day will encompass the first 8 Losers Bracket Matches. Both Winners and Losers Bracket Matches will be played on Base.

We should have some coverage from BFBRadio (DJ: Alp!ne), Radio-iTG (DJ: Trillian, DeeAy, Vradi), and WolfTV (Cam: Digita|, Xero, PissClams, etc.Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (29)

.:Back to Top:. |

|

|

|

| SmokeH3rb OLTL XSTAB |

| Posted on Saturday, November 4, 2006 at 06:26:01 AM | [a]str33tz |

|

| |

[A]nimate.Gaming is proud to announce the revival of SmokeH3rb.

For those that don't know, BULL-Z and STR33TZ decided to open up SmokeH3rb, a former OLTL XSTAB Pub. This server will be located in CHICAGO and features a 26 slot server + 4 private slots.

Now I know what everyone is wondering, what makes this OLTL XSTAB server different than the rest? Well for starters, the location is Chicago.Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (18)

.:Back to Top:. |

|

|

|

| Shrub Playoffs Week 1 Results |

| Posted on Friday, November 3, 2006 at 06:41:05 AM | [a]str33tz |

|

| |

Results for the first round of playoffs are

syndicate vs BYE

-ASC- vs .:. 0-3 Assault, UFO, Frostbite

lamp-vs [op:4] 3-2 (Lamp refuses to tell us what maps were used)

-[x]- vs Dark 3-2 Axis_Complex, Assault, Beach

[a] vs rage 3-0 Assault, Ice, Base

-TAO- vs =nMn= 2-3

:+: vs -Tv- 0-3 Assault, Rocket

(!) vs :) 3-0 Base, Beach, Keep

Good luck to all teams moving on to round 2 of playoffs.

|

Edit this News Post | Delete this News Post

Submit News |

Read Comments (14)

.:Back to Top:. |

|

|

|

| Shrub League Round 1 of Playoffs |

| Posted on Friday, October 27, 2006 at 06:40:36 PM | [a]str33tz |

|

| |

Season 6 will be featuring a new way of picking playoff maps...

1. Higher rated team will select first map, which will be used for the first round

2. Team losing the first round will select a map for use in the second round

3. Team losing the second round will select a map for use in the third round

4. Team losing the third round (if match is not set) will select a map for use in the forth round

5. Team losing the forth round (if match is not set) will select a map for use in the final, fifth round.

Matchups are as follows

syndicate vs BYE

-ASC- vs .:.

lamp-vs [op:4]

-[x]- vs Dark

[a] vs rage

-TAO- vs =nMn=

:+: vs -Tv-

(!) vs :)

Good luck to everyone. Let the predictions begin.

Remember please check your stocks, vote on MOTW and vote for stock predictions. |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (25)

.:Back to Top:. |

|

|

|

| BFB Tournament only days away! |

| Posted on Thursday, October 26, 2006 at 05:42:26 AM | [a]str33tz |

|

| |

With Battle For Berlin only days away, lets take this time to be active on the osp servers.

Hells Gate #1

and

Hells Gate #2

Are up and running. Thanks to Donka, since 10:00 PM est. and on through the night, Hells Gate 1 has been full of pubbers. I personally took the time to get my shot back and prepare for BFB. Lets stay active until OSP season 13 starts!!!Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (4)

.:Back to Top:. |

|

|

|

| Forever Rage |

| Posted on Friday, October 20, 2006 at 03:52:04 AM | Merlin@toR |

|

| |

Earlier this year Marcus Welfare was swept out to sea on a family trip with his family, shortly afterwards I decided to make a movie, with a lot of help, as a tribute to such a well liked player and one of the few public server characters-

Forever Rage

http://www.own-age.com/vids/video.aspx?id=9394 own-age movie page, swertcw and filefront links and other posted mirrors can be found here-

please note when watching that all frags are from limbo spectating and/or spectators on the server- none are from Rage's original POV

Thanks- |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (17)

.:Back to Top:. |

|

|

|

| TWL RtCW - New Ladder Competition |

| Posted on Thursday, October 12, 2006 at 04:45:30 PM | NiteSwine|TWL |

|

| |

TWL RtCW will be phasing out standard competition ladders due to inactivity. While policy suggests/recommends completely dropping ladder competition, the admin staff for this particular game wishes to provide an option we hope will benefit the community: Power Ladders.

Power ladders are different than competition ladders in many ways:

1. Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (9)

.:Back to Top:. |

|

|

|

| Shrub Night Tonight |

| Posted on Thursday, October 5, 2006 at 07:53:15 PM | -doNka- |

|

| |

Tonight's the special night for shrub. First time since ..ever TWL Shrub league will have it's own predictions and stock sections set up. You can use the links below to use the site features associated with TWL shrub league matchups.

View Upcoming Matches

View/Manage Stocks

Post Predictions

Notice that Richest Stock Traders section was modified to display only active users. Let me point out that it's the best time ever to bet your money since the OSP playoffs are over and Shrub teams are starting fresh. You have several hours before stocks and predictions lock themselves down for the rest of the night.

There were several questions about MOTW section of the site. It allows users to vote for their favorite matches, but it currently uses different algorithm to determine MOTW. It goes by the closest user predictions, not by the votes. I will think what could be done about it in the nearest future.

There are currently 15 teams in shrub season, and OSP season will be coming up soon.(this is actually up to TWL admins). Even though things always look a little slow between seasons there are still people who want to play league matches and pubs after having some rest. Make sure you tell your RTCW and ET friends about current activities and let them know what's coming up.

We still have two major pub servers from =Fedz=

Hells Gate 1

Hells Gate 2 |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (8)

.:Back to Top:. |

|

|

|

| Interview with TosspoT of Radio ITG / Crossfire |

| Posted on Tuesday, October 3, 2006 at 05:46:25 AM | Mortal :o |

|

| |

I recently sat down with TosspoT, shoutcaster for Radio ITG and administrator with Crossfire. The result was quite an interesting interview.

TosspoT has accomplished much in his time in the European community, with him being apart of one of Europes largest community sites, a vast archive of shoutcasts for matches in ET, RtCW, CoD, Warcraft, and more!, and quite an interesting clan history.

He's a great guy, and I hope you enjoy the interview!

Interview: http://www.planet-rtcw.com/?page=articles&id=249 |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (8)

.:Back to Top:. |

|

|

|

| TWL Season 12 Stocks Winners |

| Posted on Monday, October 2, 2006 at 06:47:01 PM | -doNka- |

|

| |

Stocks were brought back to life at the beginning of this past TWL-12 season. Cash amounts for all users were reset and the behind-the-scenes competition started. 73 users made predictions and purchased stocks in a race for the title of the most accurate e-sports analyst in RTCW discipline. The results are in.

Top 3 E-Sports Analysts in RTCW discipline are:

1. crapshoot - $23,609.70

2. w0rd* - $19,370.71

3. /blur/Lloyd - $18,642.85 |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (18)

.:Back to Top:. |

|

|

|

| Greetings from Alias |

| Posted on Friday, September 29, 2006 at 02:03:28 AM | gisele |

|

| |

As many of you are aware, Kevin "Alias" Newman quit the internets for real-life Return to Castle Wolfenstein a few months ago when he joined the U.S. military. Both Sage and I have since received letters from him, acknowledging that he is indeed alive and well!

Alias want's everyone to know that he misses us all and says "hi.Read more... |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (43)

.:Back to Top:. |

|

|

|

| Battle For Berlin - 11 slots left... |

| Posted on Sunday, September 17, 2006 at 03:17:29 AM | Mortal :o |

|

| |

Hello Planet-RtCW,

Battle For Berlin is going in the right direction with some new additions to the team list, map testing in-progress, and CVAR/rules being finalized soon.

We are still looking for 3 more EU admins to help out the tournament, aswell as 1 NA admin. If anyone is interested please contact me asap.

There are only 11 spots left out of 32, with each day 2-3 more teams sign up. Please sign up asap to hold a spot for your team for the tournament. Send a "draft" with your team name, 3 players + guids, and a team leader email (all which can be changed prior to the tournament) asap.

More info on the tournament:

Battle For Berlin News

Battle For Berlin Team Registration

Battle For Berlin Game Rules

Battle For Berlin Forums

Thanks for taking interest in Battle For Berlin! For detailed listings on our prizes, sponsors, rules, brackets, and more; please visit our website. Thanks and enjoy the tournament!

Zach "Mortal" Hooper

Battle For Berlin Tournament Director |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (20)

.:Back to Top:. |

|

|

|

| Sites Hacked |

| Posted on Saturday, September 16, 2006 at 06:28:32 AM | CyberKnight |

|

| |

Sometime today all the websites on the network including 100's of others got hacked. All the websites delete, everything.

I got the most current database I had on me atm which was from Sept 10th, its a bit old. But it is better then starting out fresh.

Sorry for the problems, the website may not be stable yet. But we are working on it. |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (10)

.:Back to Top:. |

|

|

|

| Shrub Season 6 Week 1 on Assault |

| Posted on Monday, September 4, 2006 at 07:38:45 PM | [a]streetz-work |

|

| |

Week ones matchups which were made by we happy few's trinners will feature the following teams....

-[x]-Treme v We Happy Few

Squad Bravo v The Pain Train

Cross:+:Breed v The Awesome Ones

-Dark- v Team Hostility

I Love Lamp v Team Seven Sins

No Medics Necessary v Syndicate-Soldiers

onslaught v Opposing Forces RTCW

These teams did not receive a match due to not having enough players on the roster at time of posting.

D-Up

team trinity

Team Untouchable

The swat guys |

Edit this News Post | Delete this News Post

Submit News |

Read Comments (39)

.:Back to Top:. |

|

|

|

| Battle For Berlin - We need Testers, Admins, and your opinion! |

| Posted on Sunday, September 3, 2006 at 12:01:21 PM | Mortal :o |

|

| |

Battle For Berlin needs your help! We are looking for admins to help oversee matches, help spread the word about tournament updates, and provide their personal expertice as needed.

We are also looking for teams to test custom maps. We have quite a few selected that have passed the initial admin testing, but want to see what a team of competative players thinks of it. We currently need 2 more teams.

We also want your opinion on anything and everything! We want to know what CVARs you want, what maps you want, and what we should do to make the tournament better. Any of these can be posted at any time in our forums.